David Bruce

Engineering Manager, General Industry & Automotive Segment

FANUC America Corp.

Machine vision (MV) is a technology that continues to grow, both in raw capability and in its ability to add value across many industries, including manufacturing. This growth is driven primarily by the increasing capability and falling cost of the computing hardware needed to run MV applications. Today, several MV companies provide value to a wide range of manufacturing operations, from automotive to logistics and everywhere in between.

From 2009 to 2019, total financial transactions in the North American MV market grew from less than $1.1 billion to over $2.7 billion, an average growth rate of roughly 15% per year (a simple, non-compounded average).
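
An "average growth rate" can be computed either as a simple average or as a compounded rate, and the two differ noticeably over ten years. This short Python sketch computes both from the approximate endpoints quoted in the text; the function names are illustrative. With $1.1 billion and $2.7 billion over ten years, the simple average comes to about 14.5% per year, while the compound annual growth rate (CAGR) is about 9.4%.

```python
def simple_average_growth(start: float, end: float, years: int) -> float:
    """Total growth divided evenly across the years (no compounding)."""
    return (end - start) / start / years


def compound_annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly rate that compounds from start to end (CAGR)."""
    return (end / start) ** (1 / years) - 1


# Approximate figures from the text, in billions of USD.
start_2009, end_2019, span = 1.1, 2.7, 10

print(f"simple average: {simple_average_growth(start_2009, end_2019, span):.1%}")
print(f"compounded:     {compound_annual_growth(start_2009, end_2019, span):.1%}")
```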

MV uses a variety of algorithms to process and analyze 2D digital images in order to automatically segment, detect, locate, measure, and classify objects within an image. The classic MV application is defect detection, which can run at more than 1,000 parts per minute, providing real-time inspection of 100% of the products produced rather than sampling one in every 100 or 1,000. This achievement is a direct result of the processing speeds available with modern computer hardware. It is a truly remarkable shift with far-reaching consequences, especially in the food and pharmaceutical industries, where mistakes can be life-threatening.
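
As a minimal illustration of the segment-and-detect step, the following Python sketch flags dark blemishes on a bright part by thresholding a grayscale image and grouping adjacent dark pixels into blobs. The threshold, minimum blob area, and function name are illustrative assumptions; production MV tools add controlled lighting, calibration, and far faster implementations.

```python
from collections import deque


def find_defects(image, threshold, min_area=1):
    """Return bounding boxes (row0, col0, row1, col1) of dark blobs.

    image: 2D list of gray values (0 = black, 255 = white).
    Pixels darker than `threshold` are defect candidates; 4-connected
    candidate pixels are grouped into one blob, and blobs smaller than
    `min_area` pixels are ignored as noise.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] >= threshold:
                continue
            # Breadth-first flood fill over the connected dark region.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] < threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_area:
                ys = [p[0] for p in pixels]
                xs = [p[1] for p in pixels]
                boxes.append((min(ys), min(xs), max(ys), max(xs)))
    return boxes


# A bright 5x6 part image with one dark 2x2 blemish and one isolated dark pixel.
part = [
    [200, 200, 200, 200, 200, 200],
    [200,  40,  35, 200, 200, 200],
    [200,  38,  42, 200, 200, 200],
    [200, 200, 200, 200, 200, 200],
    [200, 200, 200, 200,  50, 200],
]
print(find_defects(part, threshold=100, min_area=2))  # [(1, 1, 2, 2)]
print("PASS" if not find_defects(part, 100, 2) else "REJECT")  # REJECT
```

Raising `min_area` trades sensitivity for fewer false rejects from sensor noise; here the single dark pixel is ignored while the 2x2 blemish rejects the part.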

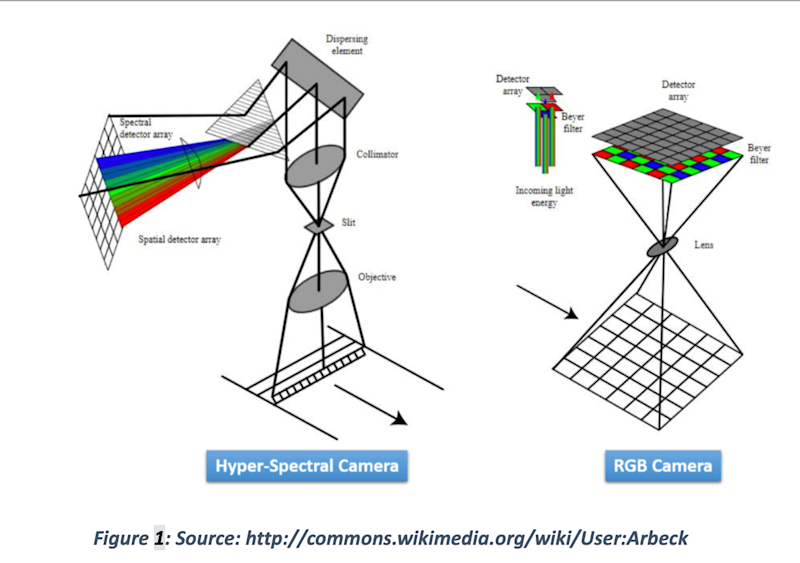

One of the latest innovations in MV is the hyperspectral (HS) camera, which collects full spectral data for each point in a scene. An HS camera uses a regular 2D CMOS image sensor but adds a prism or other light-dispersing element (such as a diffraction grating), precisely tuned to spread the spectrum of the incoming light across one dimension of the sensor. One sensor dimension therefore becomes the position dimension (left to right across a conveyor, for example), and the other becomes the spectral dimension (spanning wavelengths between UV and IR). Because each exposure captures only a single line of the scene, either the scene must move beneath the camera (on a conveyor belt) or the camera must move over the scene (carried by a drone or robot). Each imaged slice is combined with the corresponding conveyor, drone, or robot position to build a 2D image of the scene with a full spectrum at every pixel. Full-spectrum information makes identifying and differentiating materials and substances much easier than with a traditional color image, which contains only red, green, and blue information.
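
A simplified sketch of how line-scan slices become a spectral image: each exposure gives one row of cross-belt positions by spectral bands, tagged with the conveyor position at capture, and the rows are stacked in belt order into a cube indexed `cube[y][x][band]`. The encoder-tagged input format and the function name are assumptions for illustration; real systems also handle radiometric calibration and resampling to a uniform belt step.

```python
def build_hypercube(slices):
    """Assemble pushbroom slices into a hyperspectral cube.

    slices: iterable of (belt_position, frame) pairs, where frame[x][band]
    is the intensity at cross-belt position x in spectral band `band`.
    Returns (positions, cube) with cube[y][x][band]; rows are ordered by
    belt position so that y follows the direction of travel.
    """
    ordered = sorted(slices, key=lambda item: item[0])
    if not ordered:
        raise ValueError("no slices captured")
    width, bands = len(ordered[0][1]), len(ordered[0][1][0])
    for _, frame in ordered:
        if len(frame) != width or any(len(s) != bands for s in frame):
            raise ValueError("all frames must share the same width and band count")
    positions = [pos for pos, _ in ordered]
    cube = [[list(spectrum) for spectrum in frame] for _, frame in ordered]
    return positions, cube


# Slices tagged with hypothetical encoder readings (deliberately out of order).
captured = [
    (20, [[0.2, 0.2, 0.3, 0.4], [0.5, 0.5, 0.5, 0.5]]),
    (0,  [[0.1, 0.2, 0.3, 0.4], [0.5, 0.5, 0.5, 0.5]]),
    (10, [[0.1, 0.2, 0.3, 0.4], [0.9, 0.1, 0.1, 0.9]]),
]
positions, cube = build_hypercube(captured)
print(positions)                                  # [0, 10, 20]
print(len(cube), len(cube[0]), len(cube[0][0]))   # 3 2 4
print(cube[1][1])                                 # [0.9, 0.1, 0.1, 0.9]
```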

HS has really taken off in automated recycling and food processing. The spectral signatures of certain materials, or of defects in food, are very easy to identify in the output of an HS camera system. The locations of these materials or defects can be used by an industrial robot to process or remove defective items, or by a fixed automation system of flippers or air puffers to eject a target item from a continuous flow of product that may appear homogeneous to the naked eye. A great example of this is an Almond Sorter & Grading system that uses a Headwall HS imaging sensor and a FANUC Delta robot to locate and remove foreign bodies (non-almonds) and almonds with significant chips. HS imaging is also used in agriculture by mounting an HS camera on a drone and flying it over a large agricultural complex. In this situation, the HS images make it easy to identify which crops are growing in which fields and whether those crops are thriving or struggling.
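
Spectral signatures are often matched with simple similarity measures. One common choice, used here as an illustrative assumption rather than what the systems mentioned above necessarily use, is the spectral angle mapper, which compares the shape of two spectra independent of overall brightness. The reference signatures and angle threshold below are made-up values.

```python
import math


def spectral_angle(a, b):
    """Angle (radians) between two spectra; a small angle means similar material."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def classify(spectrum, references, max_angle):
    """Return the closest reference label, or None if nothing is close enough."""
    label, angle = min(
        ((name, spectral_angle(spectrum, ref)) for name, ref in references.items()),
        key=lambda item: item[1],
    )
    return label if angle <= max_angle else None


# Hypothetical 4-band reference signatures; real libraries are measured per product.
references = {
    "almond": [0.30, 0.45, 0.60, 0.55],
    "shell":  [0.50, 0.40, 0.30, 0.20],
}
belt_pixels = [[0.31, 0.44, 0.61, 0.56], [0.52, 0.41, 0.29, 0.18], [0.9, 0.1, 0.9, 0.1]]
for spectrum in belt_pixels:
    label = classify(spectrum, references, max_angle=0.1)
    print(label, "-> keep" if label == "almond" else "-> eject")
```

The third spectrum matches neither reference, so it is treated as a foreign body and ejected along with the shell fragment.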

While 2D imaging provides vast amounts of information and is by far the predominant technology for MV applications today, several different 3D technologies that produce near-real-time 3D representations of a scene are starting to be embraced for various manufacturing applications.

3D MV begins with a 3D camera, and 3D cameras fall into two main categories: passive and active. Passive 3D techniques use algorithms to analyze the same scene as viewed from two or more 2D cameras whose locations are precisely known. The algorithms search the images for corresponding points, that is, image points that depict the same point in the scene. Once a correspondence is found, the point's XYZ location can be calculated by triangulation from the known camera locations and the calibrated position of the point in each image. With passive stereo, the scene needs a certain amount of local variation or texture for the correspondence algorithms to work.
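
For the common case of two rectified cameras (image rows aligned so that matches lie on the same row), correspondence search and triangulation can be sketched in a few lines of Python. This sketch uses simple sum-of-absolute-differences block matching and the standard relation Z = f·B/d, with focal length f in pixels, baseline B, and disparity d. The function names and numbers are illustrative, and commercial systems use far more robust matchers. The texture-free example shows why passive stereo struggles: every candidate shift matches equally well.

```python
def match_disparity(left_row, right_row, x, half_window, max_disparity):
    """Block-match pixel x of a rectified left scanline against the right one.

    Returns (best_disparity, best_cost, second_best_cost) using the sum of
    absolute differences (SAD). With rectified cameras the match lies on the
    same row, shifted left by the disparity: x_right = x - d.
    """
    patch = left_row[x - half_window:x + half_window + 1]
    costs = []
    for d in range(max_disparity + 1):
        xr = x - d
        if xr - half_window < 0:
            break  # candidate window would fall off the image
        window = right_row[xr - half_window:xr + half_window + 1]
        costs.append((sum(abs(a - b) for a, b in zip(patch, window)), d))
    if not costs:
        raise ValueError("pixel too close to the image edge")
    costs.sort()
    best_cost, best_d = costs[0]
    second_cost = costs[1][0] if len(costs) > 1 else float("inf")
    return best_d, best_cost, second_cost


def depth_from_disparity(d, focal_px, baseline_m):
    """Triangulated depth Z = f * B / d for a rectified stereo pair."""
    if d <= 0:
        raise ValueError("zero disparity: no depth can be triangulated")
    return focal_px * baseline_m / d


# Textured scanline; the right camera sees the same pattern shifted 2 px left.
left = [10, 10, 80, 20, 90, 30, 10, 10, 10, 10]
right = left[2:] + [10, 10]
d, cost, second = match_disparity(left, right, x=4, half_window=1, max_disparity=4)
print(d, cost, second)                                          # 2 0 110
print(depth_from_disparity(d, focal_px=800.0, baseline_m=0.1))  # 40.0

# Texture-free scanline: every shift matches equally well (best == second best).
flat = [50] * 10
print(match_disparity(flat, flat, x=4, half_window=1, max_disparity=3))  # (0, 0, 0)
```

Comparing the best and second-best costs is one simple way to reject ambiguous matches; active stereo avoids the problem by projecting texture onto the scene.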

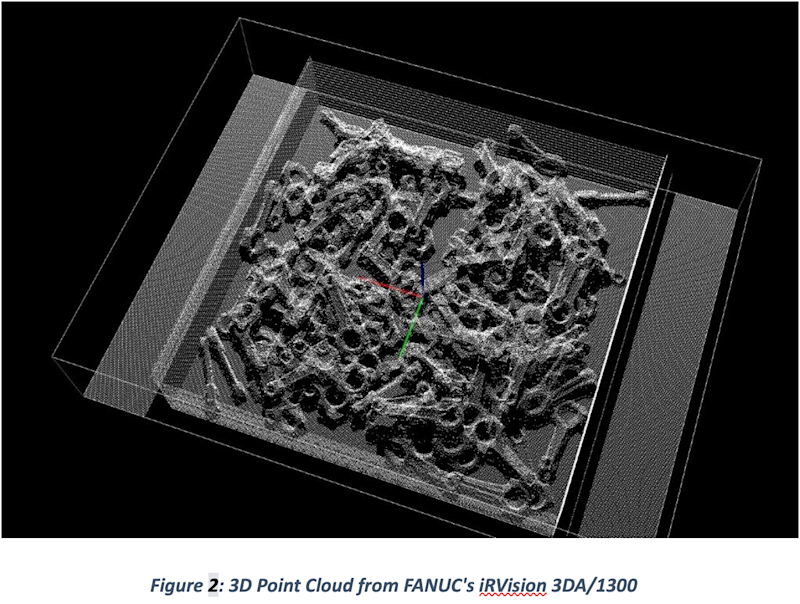

With active stereo, structured or coded light is projected onto the scene to add texture artificially and facilitate correspondence matching, which typically results in more 3D points being calculated. The output of a 3D camera system is typically called a point cloud or a depth image. A point cloud is a set of XYZ values, one for each location measured by the system, expressed relative to some reference frame, typically the camera itself. A depth image is a 2D grayscale or color image in which each pixel value represents the distance from the camera. In a grayscale depth image, for example, white may be closer, black farther, and shades of gray in between; alternatively, red may be closer, blue farther, and the traditional rainbow colors in between. Some 3D MV algorithms analyze a depth image, others a point cloud, and some use both from the same scene to produce the information the application needs.
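
The relationship between the two outputs can be made concrete with a pinhole camera model: a depth-image pixel (u, v) with depth Z back-projects to X = (u − cx)·Z/fx and Y = (v − cy)·Z/fy. The sketch below assumes Z is measured along the optical axis and that calibrated intrinsics `fx`, `fy`, `cx`, `cy` are available; it also renders depth with a near-is-white grayscale convention. Function names and values are illustrative.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image into camera-frame XYZ points.

    depth[v][u] is the distance along the optical axis (Z) for pixel (u, v);
    zero marks pixels where the sensor found no 3D point.
    fx, fy, cx, cy are pinhole intrinsics in pixels (assumed calibrated).
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no measurement at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


def depth_to_gray(depth, z_near, z_far):
    """Render depth as 8-bit gray: near = white (255), far = black (0).

    Depths are clamped to [z_near, z_far]; no-data pixels are drawn black.
    """
    gray = []
    for row in depth:
        out = []
        for z in row:
            if z <= 0:
                out.append(0)
                continue
            t = (min(max(z, z_near), z_far) - z_near) / (z_far - z_near)
            out.append(round(255 * (1 - t)))
        gray.append(out)
    return gray


depth = [
    [1.0, 1.0, 0.0],
    [1.0, 2.0, 1.5],
]
cloud = depth_to_point_cloud(depth, fx=100.0, fy=100.0, cx=1.0, cy=0.5)
print(len(cloud))   # 5 (one pixel had no depth)
print(cloud[3])     # (0.0, 0.01, 2.0)
print(depth_to_gray(depth, z_near=1.0, z_far=2.0))  # [[255, 255, 0], [255, 0, 128]]
```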

MV is used primarily for real-time inspection and for machine guidance; historically, however, far less MV has been deployed for machine guidance than for inspection. In machine guidance, MV locates parts in real space so that some type of programmable manipulator can handle or process them. When that machine is a modern industrial robot, this is referred to as vision-guided robotics, or VGR. VGR is fast becoming a large niche sector of the MV market because it makes industrial robots much more flexible. Industrial robots are remarkably repeatable, programmable manipulators that come in all shapes and sizes. A six-axis industrial robot can manipulate its tooling in full 3D, meaning that it can reach positions and orientations in all six degrees of freedom within its work envelope. VGR began with 2D MV technology, in which a part is located in two dimensions plus one orientation angle, often referred to as X, Y, and R (the rotation about the Z axis). The ability of robots to locate parts in 2D provided many advantages across applications, including greater flexibility and lower deployment costs. Before 2D VGR, consistent and repeatable part presentation required expensive custom fixtures for each part style.
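
The core of 2D VGR can be sketched as a rigid-transform correction: at setup, the vision system records where a reference part sits (X, Y, R) and the robot is taught a pick pose; at runtime, the pick pose is shifted by the part's measured displacement. This Python sketch assumes the camera and robot share a calibrated planar coordinate frame and uses hypothetical function names; it is not the API of FANUC iRVision or any other product.

```python
import math


def compose(a, b):
    """Compose 2D rigid poses (x, y, r_degrees): the result applies b, then a."""
    ax, ay, ar = a
    bx, by, br = b
    c, s = math.cos(math.radians(ar)), math.sin(math.radians(ar))
    return (ax + c * bx - s * by, ay + s * bx + c * by, (ar + br) % 360)


def inverse(p):
    """Inverse of a 2D rigid pose (x, y, r_degrees)."""
    x, y, r = p
    c, s = math.cos(math.radians(r)), math.sin(math.radians(r))
    return (-(c * x + s * y), -(-s * x + c * y), (-r) % 360)


def corrected_pick(taught_pick, reference_part, found_part):
    """Move a taught pick pose by the part's displacement since teaching.

    offset = found * reference^-1, so a part found exactly at its reference
    pose leaves the taught pick unchanged.
    """
    offset = compose(found_part, inverse(reference_part))
    return compose(offset, taught_pick)


# Taught: part center at (100, 50) mm, 0 deg; gripper taught 10 mm to its +X side.
# Runtime: the camera finds the part at (120, 40) mm, rotated 90 deg.
pick = corrected_pick((110.0, 50.0, 0.0), (100.0, 50.0, 0.0), (120.0, 40.0, 90.0))
print(tuple(round(v, 3) for v in pick))  # (120.0, 50.0, 90.0)
```

The gripper's 10 mm offset rotates with the part, which is why the corrected pick lands 10 mm along the part's new +Y direction rather than simply translating.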

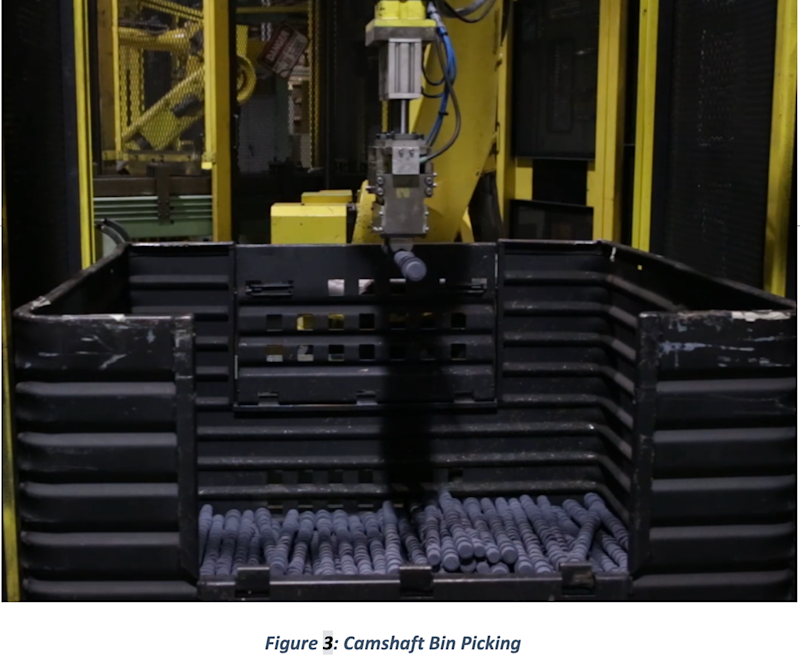

3D VGR is fast becoming a market niche of its own, with 3D MV technologies now offered by all the major MV camera OEMs and some robot OEMs offering fully integrated MV technology, such as FANUC’s iRVision. The killer application for 3D VGR is bin picking, which has been called the holy grail of VGR. In a bin picking application, a six-axis industrial robot removes parts that lie in a bin in either a structured or a completely unstructured arrangement. In many manufacturing processes today, the only manual step left is loading parts from a bin into the system. The challenge in bin picking lies not only in 3D MV but also in end-of-arm tooling: how do you grip a part that can be presented in an infinite number of ways in a bin? Often, a two-step process is used in which the robot extracts one or more parts from the bin and drops them onto a secondary station, where 2D MV provides a precise location so the robot can re-pick each part and load it into the next step of the manufacturing process.
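
Choosing which part to pick next is one place where bin picking logic becomes application-specific. The sketch below shows one simple, hypothetical selection heuristic in Python: discard low-confidence matches, parts tilted beyond what the gripper can reach, and parts hugging the bin walls, then take the highest remaining part. The data fields, thresholds, and bin dimensions are illustrative assumptions, not values from a real system.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Candidate:
    """One part instance located by 3D vision (hypothetical fields)."""
    x: float          # mm from bin corner, robot-aligned frame (assumed)
    y: float          # mm from bin corner
    z: float          # mm above bin floor
    tilt_deg: float   # angle of the part's grip axis from vertical
    score: float      # match confidence, 0..1


def choose_pick(candidates: Sequence[Candidate], bin_size=(600.0, 400.0),
                min_score=0.7, max_tilt_deg=30.0,
                wall_margin=20.0) -> Optional[Candidate]:
    """Return the highest confidently matched, reachable part, or None."""
    width, depth = bin_size
    usable = [
        c for c in candidates
        if c.score >= min_score                        # trust the 3D match
        and c.tilt_deg <= max_tilt_deg                 # gripper can approach it
        and wall_margin <= c.x <= width - wall_margin  # tool clears bin walls
        and wall_margin <= c.y <= depth - wall_margin
    ]
    # Prefer the topmost part: it is least likely to be pinned under others.
    return max(usable, key=lambda c: (c.z, c.score), default=None)


scan = [
    Candidate(300.0, 200.0, 150.0, 10.0, 0.90),  # good candidate
    Candidate(310.0, 190.0, 180.0, 45.0, 0.95),  # higher, but tilted too far
    Candidate(10.0, 200.0, 200.0, 5.0, 0.99),    # highest, but against a wall
    Candidate(200.0, 100.0, 120.0, 5.0, 0.60),   # match too uncertain
]
print(choose_pick(scan))  # the first candidate (z=150.0)
```

When no candidate qualifies, the function returns `None`; what a real cell does then (rescan, or disturb the bin and try again) is an application decision not covered here.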

A good example of this is a camshaft bin picking process executed by FANUC America for a large automotive OEM. The original process was robotic but had no vision guidance. Racks were designed to hold the raw camshafts in repeatable stacks, and the robot processed them relying strictly on its repeatability and hard-coded offsets. This system crashed often, because the raw cast camshafts did not always sit flat on top of one another and the racks were sometimes not built to specification. The OEM decided to try a two-step bin picking process using a FANUC R-2000iB/165F six-axis robot and a FANUC iRVision 3D Area Sensor to locate camshafts stacked in a semi-structured way inside a simple steel bin. The robot used this location to guide its MagSwitch magnetic tooling to extract one camshaft from the bin. The extracted camshaft was then dropped onto a secondary 2D vision table, where a fixed FANUC iRVision 2D camera provided a precise location for the robot to re-pick the camshaft and load it into the deburring machine. Occasionally the first step extracted two camshafts; the secondary 2D vision table detected this, and both parts were automatically ejected onto the floor, because two parts on the table could not be handled properly. Refinements to the MagSwitch magnetic tooling over time made double picks very rare. Overall, the new system performed much better than the previous one and provided numerous advantages to the OEM’s process. Because the bins held five times as many parts as the racks, containers had to be swapped only one-fifth as often, resulting in less fork truck traffic. Once the bin picking process was fine-tuned, the system's overall operating efficiency rose from 70% to 90% or higher, primarily due to a dramatic reduction in system stoppages.
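
The decision logic of the two-step camshaft cell can be summarized in a short Python sketch: the number of parts the 2D camera finds on the secondary table drives the next action, with one part re-picked and loaded and more than one ejected. The empty-table retry branch, the names, and the simulated counts are assumptions added for illustration; the actual cell ran on FANUC robot controllers and iRVision, not Python.

```python
from enum import Enum


class Action(Enum):
    LOAD_MACHINE = "re-pick from 2D table and load the deburring machine"
    EJECT = "eject the parts from the 2D table"
    RETRY_BIN = "table empty; pick from the bin again"  # assumed branch


def second_stage_action(parts_on_table: int) -> Action:
    """Choose the next step from the number of parts the 2D camera finds."""
    if parts_on_table == 1:
        return Action.LOAD_MACHINE
    if parts_on_table == 0:
        return Action.RETRY_BIN  # hypothetical: the source does not describe this case
    return Action.EJECT          # double pick: cannot be handled on the table


def tally_cycles(table_counts):
    """Count the actions taken over a simulated sequence of bin picks."""
    tally = {action: 0 for action in Action}
    for count in table_counts:
        tally[second_stage_action(count)] += 1
    return tally


# Simulated 2D-table counts for six bin picks (made-up data).
for action, n in tally_cycles([1, 1, 2, 1, 0, 1]).items():
    print(f"{n} x {action.value}")
```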

Currently, one of the most exciting, and perhaps over-hyped, technologies being applied to MV is deep learning. Deep learning is a type of machine learning, which falls into the very broad category of artificial intelligence, or AI. In 2012, a deep convolutional neural network (D-CNN) called AlexNet, trained on about 1.2 million images to recognize 1,000 different categories of objects, achieved a top-5 error rate of 15.3% in the annual ImageNet Large Scale Visual Recognition Challenge (ILSVRC). AlexNet was a dramatic improvement over the previous year’s winner of this competition, and since then, the basic approach and design of AlexNet have been improved and scaled up to the point that its successors surpassed estimated human performance on the image classification task in 2015. The dramatic success of AlexNet and its successors is the primary reason the term AI is virtually ubiquitous today when talking about any type of new innovation involving computer-assisted decision-making.
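
AlexNet's 15.3% figure is a top-5 error rate: a prediction counts as correct if the true class appears anywhere among the network's five highest-scoring guesses. A minimal Python sketch of the metric, using made-up scores for three images and six classes:

```python
def top_k_error(scores, labels, k=5):
    """Fraction of samples whose true label is not among the k highest scores.

    scores: list of per-class score lists (one list per image).
    labels: list of true class indices.
    """
    misses = 0
    for row, label in zip(scores, labels):
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


# Toy example: 3 images, 6 classes (made-up scores).
scores = [
    [0.05, 0.10, 0.50, 0.20, 0.10, 0.05],  # true class 3 ranks 2nd
    [0.30, 0.25, 0.20, 0.15, 0.07, 0.03],  # true class 5 ranks last
    [0.90, 0.02, 0.02, 0.02, 0.02, 0.02],  # true class 0 ranks 1st
]
labels = [3, 5, 0]
print(f"{top_k_error(scores, labels, k=1):.3f}")  # 0.667
print(f"{top_k_error(scores, labels, k=5):.3f}")  # 0.333
```

Top-5 error is always less than or equal to top-1 error, which is why the two numbers should not be compared directly.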

A D-CNN processes an image through a stack of layers. Each convolutional layer applies small filters to neighborhoods of adjacent pixels, and pooling layers progressively reduce the spatial resolution, so deeper layers respond to larger regions of the image and to more abstract features. A D-CNN is trained by showing the network thousands of example images with known classifications. That many examples are needed to achieve good results, and the millions of convolution operations involved demand a great deal of computation. One of the many breakthroughs in AlexNet was the use of graphics processing units (GPUs) for both training and inference. GPUs are much more efficient than CPUs at executing the highly parallel calculations required for D-CNN processing.
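
The basic layer operations can be sketched in plain Python: a convolution slides a small filter over neighboring pixels, a ReLU keeps only positive responses, and max pooling shrinks the feature map so that later layers see larger regions. In a real D-CNN the filter values are learned during training, each layer has many filters, and the work runs on GPUs; the hand-picked vertical-edge kernel here is purely illustrative.

```python
def conv2d(image, kernel):
    """Valid 2D convolution (cross-correlation, as in most CNN frameworks)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(fmap):
    """Keep positive responses, zero the rest."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Downsample by taking the maximum of each size x size block."""
    return [
        [
            max(fmap[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(fmap[0]) - size + 1, size)
        ]
        for r in range(0, len(fmap) - size + 1, size)
    ]


# 5x5 image with a vertical dark-to-bright edge; the kernel is a hand-made
# vertical-edge detector standing in for a filter a CNN would learn.
image = [[0, 0, 9, 9, 9] for _ in range(5)]
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
features = relu(conv2d(image, kernel))
print(features)            # [[27, 27, 0], [27, 27, 0], [27, 27, 0]]
print(max_pool(features))  # [[27]]
```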

Today, D-CNNs are used in manufacturing MV for quality inspection and part identification. While D-CNNs have shown great success at certain tasks, implementing one is very time-consuming. Properly training the network requires a large number of labeled images, and when the system misclassifies something, there is usually no clear way to know why it failed. The only countermeasure is more training, and there is no definitive way to know when enough training has been done to guarantee 100% success. For these reasons, some industries have been slow to adopt D-CNN-based MV.
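
One way to see why testing alone cannot guarantee 100% success: if a trained network classifies n test images with zero errors, the true error rate can still be as high as roughly 3/n at 95% confidence (the statistical "rule of three"). The Python sketch below computes the exact bound, assuming test images are independent and representative of production; it illustrates the statistics, not any vendor's validation procedure.

```python
def max_error_rate_after_clean_test(n_tests: int, confidence: float = 0.95) -> float:
    """Upper confidence bound on the true error rate after n error-free tests.

    If the true error rate were p, the chance of n straight successes is
    (1 - p) ** n. Setting that equal to 1 - confidence and solving for p gives
    the largest error rate still consistent with a perfect test run.
    """
    return 1 - (1 - confidence) ** (1 / n_tests)


for n in (100, 1_000, 10_000):
    bound = max_error_rate_after_clean_test(n)
    print(f"{n:>6} clean tests -> error rate could still be up to {bound:.3%}")
```

Each tenfold increase in clean test images only shrinks the bound tenfold, so the bound never reaches zero however much testing is done.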

One example application is a warehouse management system where 3D sensors were being used with traditional MV to identify empty spaces on shelves. A D-CNN approach was implemented using much cheaper 2D cameras, and the resultant error rate was only 0.29% compared to 2.56% for the more expensive 3D approach. Another successful D-CNN application was for a large automotive OEM that wanted to verify that the correct running boards had been installed on finished pickup trucks. Given the large variety of running boards and the small differences between some of them, traditional MV was not successful at this confirmation task, but a D-CNN approach proved to be very accurate and quick to confirm correct installation.

Many MV hardware and software OEMs are beginning to offer D-CNN-based tools in their tool sets to enable traditional MV programmers to incorporate this new technology into their solutions.

Machine vision continues to offer tremendous value to all industries, especially manufacturing. While deep learning approaches are starting to make MV easier to deploy for certain tasks, the best way to realize value from MV is to work with professional MV integrators, some of whom are listed on the AIA website. For those interested in becoming a vision professional, or in learning more about the technical aspects of MV, the AIA also offers a Certified Vision Professional training program.